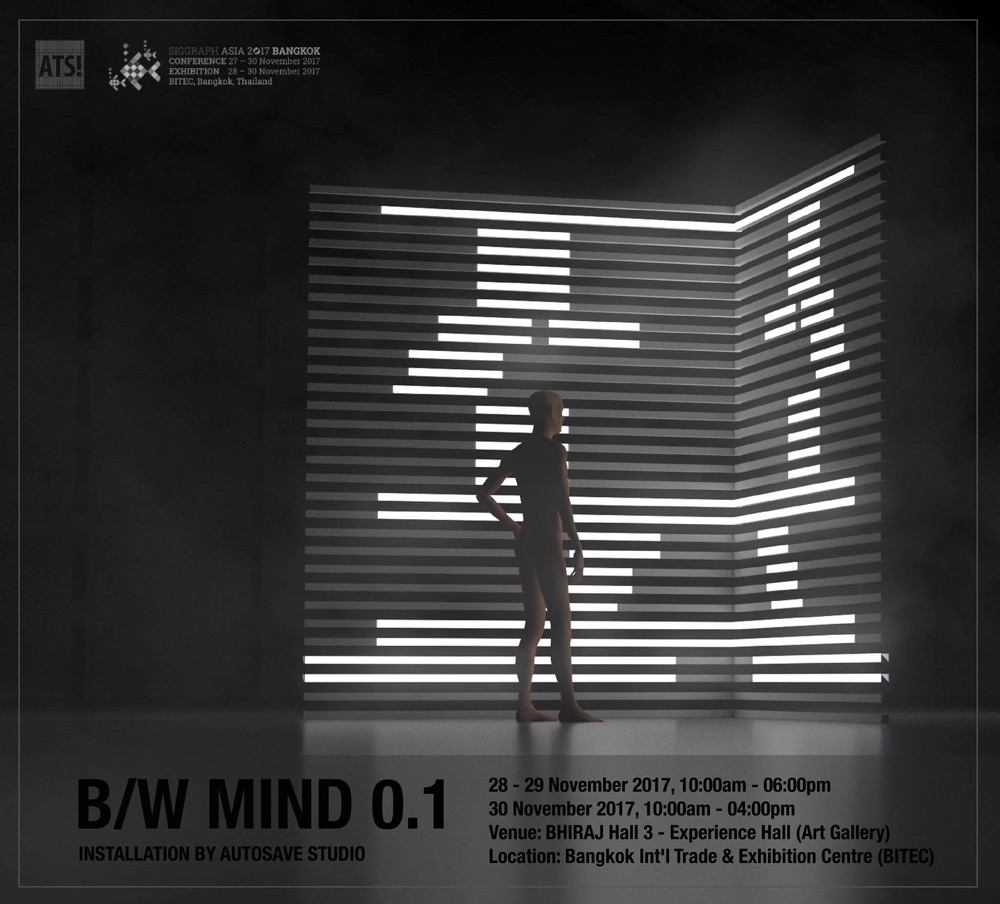

I have known about Siggraph since high school, and I once applied to volunteer at Siggraph in Japan, but had no luck. To me, it is perhaps the biggest technology and computer graphics conference in Asia. Then, in 2017, Autosave got a chance to apply for the Art Gallery of Siggraph 2017 in Bangkok, at BITEC Bangna, and we were picked!

JAM factory is an art space next to the Chao Phraya river in the heart of Bangkok. There are always events at the space: sometimes markets, sometimes concerts, and art exhibitions. A week or two after showing at Siggraph, we had the idea that we should keep showing the piece somewhere else as well, so we asked JAM factory. They were happy to have us, and we were happy to get the best location, right next to the river.

I will be focusing on how we made it. If you're interested in Siggraph or JAM factory, click the link.

Concept

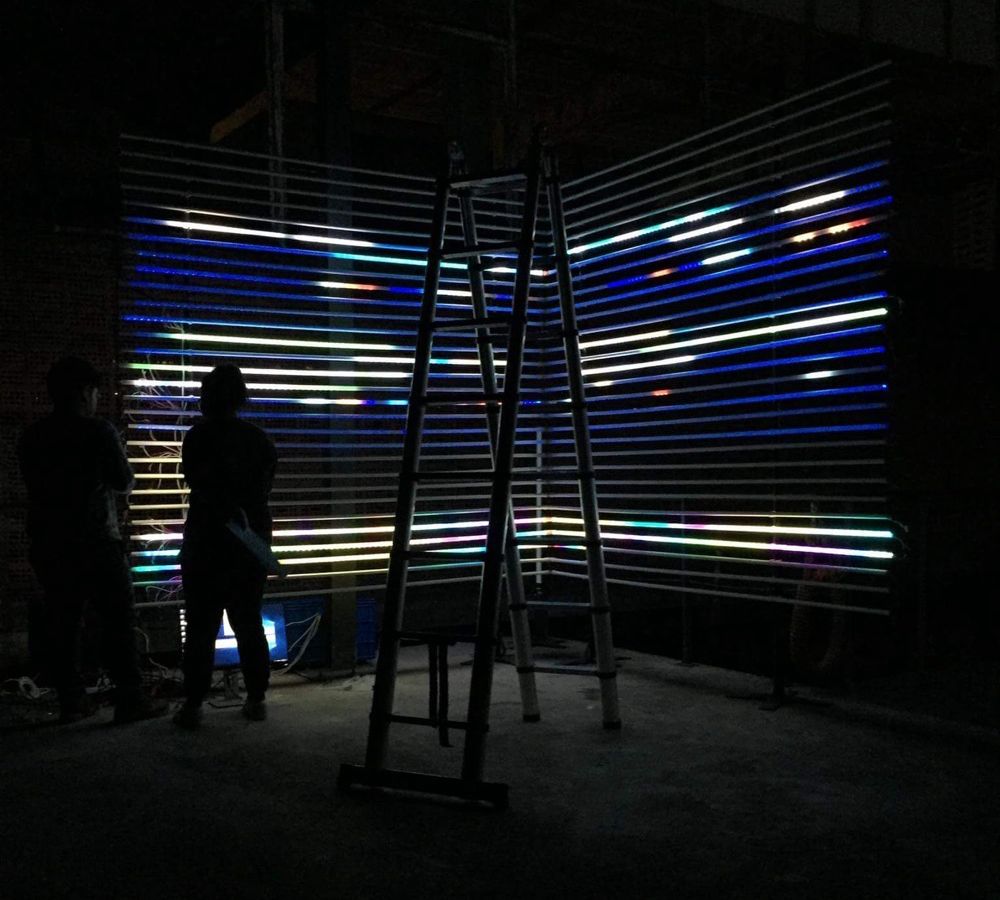

The theme of the 2017 Art Gallery of Siggraph was Mind-Body Dualism. We focused on an artwork that could interact with body movement. We already had more than 300m of LED strip in our office, so we decided to do something with it. We thought about a dome structure and an arched walkway, but the idea of two simple 3m-high walls was really intriguing. Also, the structure is 2.5+2.5m wide, which matches the 5m length of our LED strips, so there was no need to cut the strips and we can reuse them elsewhere.

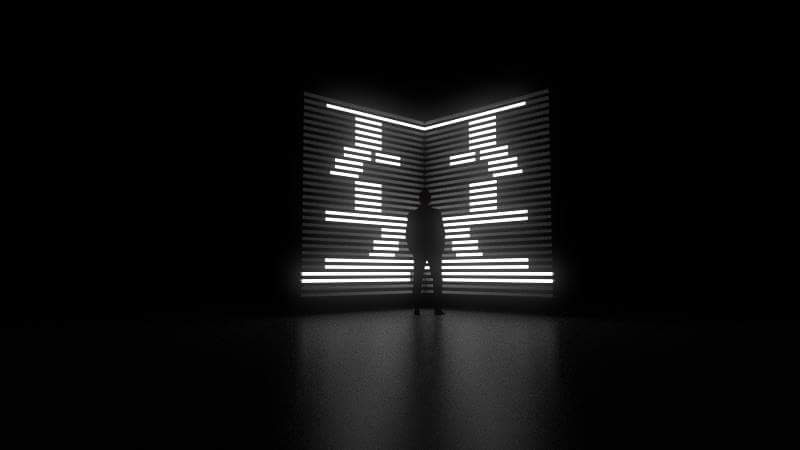

We are in fact already cyborgs, replicating ourselves as avatar forms online. Our cellphones and computers act as extensions of our own neural networks, imparting a boundless knowledge of facts and figures. B/W mind is an experiential piece designed to manifest the interconnection humans have with technology.

An explanation of the piece; my friend helped me write it.

In technical terms, B/W MIND is basically an interactive lighting installation made of 150m of LED strip. It captures the audience's movements and represents them in its own way. Autosave created it for the Siggraph 2017 conference in Bangkok and then got a chance to recreate it at JAM factory.

One day I got into a car accident: I crashed into a parked car. My phone had died from low battery, and I felt useless at that moment. I couldn't call the insurance company or anyone I know, because I don't remember any of their numbers. So we are part of this machine: the machine (the phone) acts as my memory. It can also act as my consciousness, reminding me what to do each day. It can act as my knowledge, providing accurate information instantly. All these devices we rely on make us... us. So, could we say we are somehow cyborgs?

How does it work?

This installation shows that the movement of our bodies can be calculated, converted into a digital signal, and displayed on the LED strips. The installation becomes part of your body, mentally.

We use a Kinect 360 to capture depth data of the audience; it is connected to a PC. The PC calculates the movement and then generates the visual. In the visual, we have 4000 dot agents, each following its own movement rule, so when the audience moves, the dot agents react.
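To make the depth-to-agents idea concrete, here is a minimal sketch in Python (the real piece was written in Processing, and its actual agent rule is not described in this post). It assumes movement is detected by comparing two depth frames, and that agents are pushed away from cells where the depth changed. The frame size, threshold, and the push rule are all hypothetical.

```python
import random

WIDTH, HEIGHT = 64, 48    # hypothetical depth-frame size, not from the post
MOTION_THRESHOLD = 30     # hypothetical depth change that counts as movement


def motion_cells(prev_frame, frame, threshold=MOTION_THRESHOLD):
    """Return (x, y) cells whose depth changed by more than `threshold`."""
    return [
        (x, y)
        for y, (prev_row, row) in enumerate(zip(prev_frame, frame))
        for x, (a, b) in enumerate(zip(prev_row, row))
        if abs(a - b) > threshold
    ]


class Agent:
    """One dot with a position and a velocity."""

    def __init__(self, x, y):
        self.x, self.y = x, y
        self.vx = self.vy = 0.0

    def step(self, moving, radius=6.0, push=0.5, damping=0.9):
        # Assumed rule: every moving cell within `radius` pushes the agent away.
        for mx, my in moving:
            dx, dy = self.x - mx, self.y - my
            dist2 = dx * dx + dy * dy
            if 0 < dist2 < radius * radius:
                dist = dist2 ** 0.5
                self.vx += push * dx / dist
                self.vy += push * dy / dist
        # Damping lets agents settle again once the movement stops.
        self.vx *= damping
        self.vy *= damping
        self.x += self.vx
        self.y += self.vy


def make_agents(count, seed=0):
    """Scatter `count` agents uniformly over the frame."""
    rng = random.Random(seed)
    return [Agent(rng.uniform(0, WIDTH), rng.uniform(0, HEIGHT)) for _ in range(count)]
```

In a loop, each new depth frame would be compared with the previous one, and every agent would call `step` with the resulting list of moving cells before the visual is drawn.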

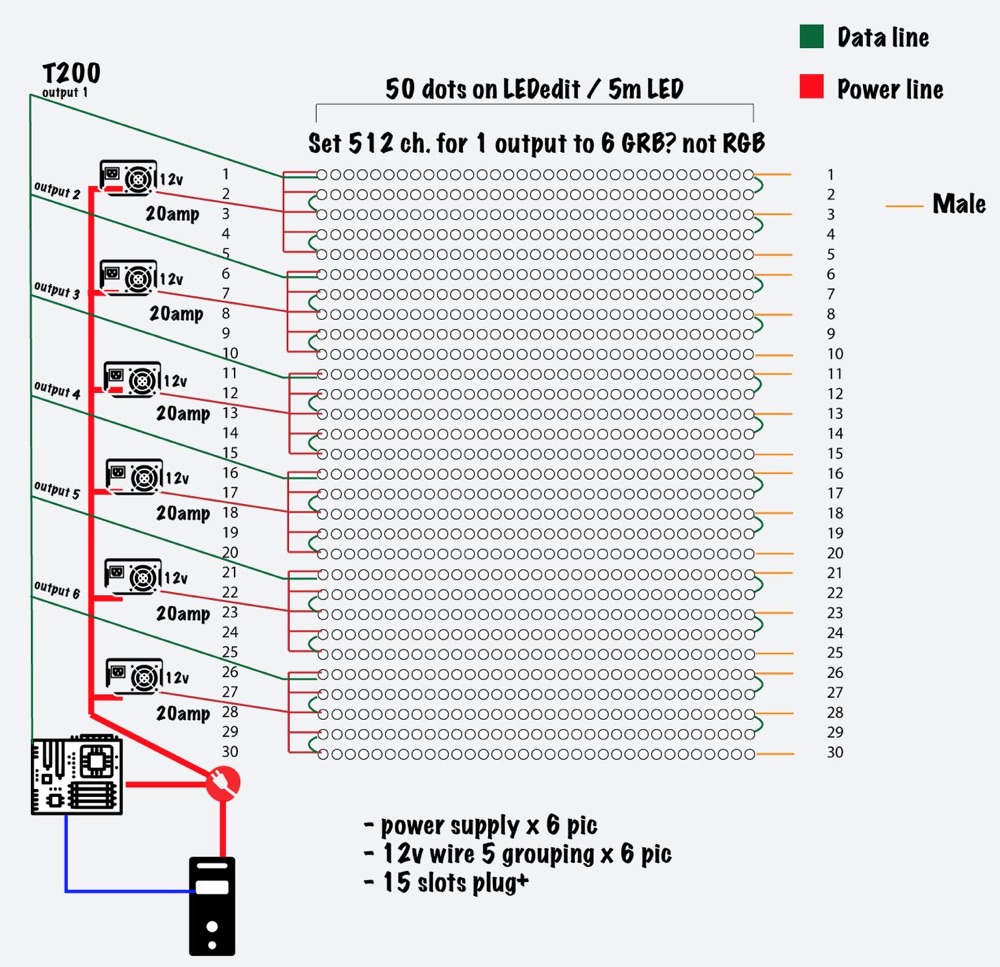

I wrote the visual software in Processing and used a single T-200k LED strip driver. The driver connects to the PC via Ethernet using the Art-Net protocol.
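For readers who have not seen Art-Net before, here is a rough sketch of what one Art-Net data frame (an ArtDmx packet) looks like on the wire, written in Python. This is only an illustration of the protocol: the post does not show how frames were actually sent, and in our setup the output side was handled by the driver's own software. The function names and the IP address in the usage note are hypothetical.

```python
import socket
import struct

ARTNET_PORT = 6454  # standard Art-Net UDP port


def artdmx_packet(universe, data, sequence=0):
    """Build one ArtDmx packet carrying up to 512 channel bytes."""
    if not 0 <= universe < 32768:
        raise ValueError("universe must fit in 15 bits")
    payload = bytes(data)
    if not 1 <= len(payload) <= 512:
        raise ValueError("an ArtDmx packet carries 1 to 512 channel bytes")
    if len(payload) % 2:
        payload += b"\x00"  # the data length field must be an even number
    return (
        b"Art-Net\x00"                      # fixed 8-byte packet ID
        + struct.pack("<H", 0x5000)         # OpCode ArtDmx, low byte first
        + struct.pack(">H", 14)             # protocol version, high byte first
        + bytes([sequence & 0xFF, 0])       # sequence number, physical port
        + struct.pack("<H", universe)       # SubUni byte, then Net byte
        + struct.pack(">H", len(payload))   # data length, high byte first
        + payload
    )


def send_frame(ip, universe, data):
    """Send one frame of channel data to an Art-Net node over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(artdmx_packet(universe, data), (ip, ARTNET_PORT))
```

A call such as `send_frame("192.168.0.50", 0, [255, 0, 0] * 50)` would set 50 RGB pixels to red on universe 0, assuming a node is listening at that (made-up) address.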

Here is part of the system diagram (lighting part)

Hardware

There are 30 strips, each 5 meters long. As you may know, each LED strip has a data direction. We use male JST SM connectors on the head and female JST SM connectors on the toe of each LED strip, which makes it easy for the team to identify the direction.

We also use a 5.5x2.5mm (or 2.1mm, I can't recall) DC plug on the head of 15 LED strips and on the toe of the other 15. The green wire is the data wire: there are 6 groups, and each group has 5 LED strips connected toe-to-head. The red wire is 12V power, connected to each LED strip's head or toe. There are six 12V power supplies. The orange wire is a power line that we did not use (it came from the factory, and we did not cut it off because we may reuse the strips for other purposes).

Software

Kinect 360 - Processing is able to connect to a Kinect; the tutorial and library are here: Shiffman: Getting Started with Kinect and Processing. I used the code from the library and integrated it with the 4000-dot-agents code I wrote.

T-200k - the hardware requires LedEdit (sadly it only works on PC), and we found that the 2017 version in particular works with the T-200k. There is nothing special about this software; it is straightforward to use. The screen mapping is easy, but if you want to map an unusual outline, it is not the right tool. The software requires a specific IP address, so we just had to configure IPv4 in the Internet Protocol properties.

LED WS2811 - five 5m LED strips (with 50 LEDs each) are connected to each output universe of the T-200k. We use 6 universes. We could do this with fewer universes, but it is a lot easier for the team to connect 6 identical modules, as shown in the system diagram.
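The numbers above can be checked with a small sketch. It assumes the strips are numbered in chain order and that "50 LEDs" means 50 individually addressable pixels per strip; the constant and function names are made up for illustration.

```python
STRIPS = 30              # total strips in the installation
STRIP_LENGTH_M = 5       # length of each strip in meters
LEDS_PER_STRIP = 50      # assumed to be individually addressable
STRIPS_PER_OUTPUT = 5    # strips chained toe-to-head on one output

TOTAL_LENGTH_M = STRIPS * STRIP_LENGTH_M               # 150 m of strip
TOTAL_LEDS = STRIPS * LEDS_PER_STRIP                   # 1500 LEDs
OUTPUTS = STRIPS // STRIPS_PER_OUTPUT                  # 6 outputs
LEDS_PER_OUTPUT = STRIPS_PER_OUTPUT * LEDS_PER_STRIP   # 250 LEDs per output


def locate(pixel):
    """Map a global pixel index to (output, strip within output, LED within strip)."""
    if not 0 <= pixel < TOTAL_LEDS:
        raise IndexError("pixel index out of range")
    strip, led = divmod(pixel, LEDS_PER_STRIP)
    output, strip_in_output = divmod(strip, STRIPS_PER_OUTPUT)
    return output, strip_in_output, led
```

For example, `locate(250)` gives `(1, 0, 0)`: the first LED of the first strip on the second output. Note that 250 RGB pixels need 750 channels, which is more than a 512-channel DMX universe holds, so "universe" here is probably best read as one output port of the driver; that reading is an assumption.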

Problems

There are problems that need to be addressed in a future version.

Software aspects

- The software should be more fluid; the movement should feel more responsive.

- After a period of time the agents move out of the display area, and the piece needs to be reset. This can be fixed easily.
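The post does not say which fix we would pick for the drifting agents, so here are two common options as a small Python sketch: wrapping an agent around to the opposite edge, or bouncing it off the edge. Both work on one coordinate at a time, and the function names are hypothetical.

```python
def wrap(pos, size):
    """Wrap a coordinate into [0, size) so the agent re-enters from the other edge."""
    return pos % size


def bounce(pos, vel, size):
    """Reflect position and velocity off the edges of [0, size].

    Assumes a single step never overshoots by more than `size`.
    """
    if pos < 0:
        return -pos, -vel
    if pos > size:
        return 2 * size - pos, -vel
    return pos, vel
```

Applying one of these to both x and y after every agent update keeps all the dots inside the display area, so no manual reset is needed.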

Hardware aspects

- Use something better than PVC pipe for the structure, so that it is sturdier and the parts align better with one another.

- Hide the wires; use better wiring and electrical connectors.

Further improvements

- Audio should be included, not just ambient. Interactive audio.

- New input - other than the Kinect 360, we could perhaps use a 360-degree camera with image processing software. (I'm looking forward to this.)

- Double the sides: create 4 sides, not just 2, and turn it into a square room.